Deep Dive 3: Nvidia (NVDA) - The Technologist

Up Next week: Groq, a private company that is a specialized chip maker that may IPO soon; recent valuation ~$3B.

Today: Nvidia (NVDA).

Reminder: TechIQ is not investment advice. This is meant as a unique service that does detailed dives into technology, rather than high level summaries available everywhere and likely written by AI. You can expect about one report a week.

Lede

Today we do a deep dive into Nvidia. Nvidia isn’t just a hardware powerhouse—it’s built an entire ecosystem that fuels today’s AI revolution and its software moat is often under appreciated by investors - but certainly not by customers.

Tell It to Me Like I'm 11-Years Old

Imagine you have a supercharged calculator that can solve thousands of math problems all at once—that’s what Nvidia makes.

When people talk about AI (artificial intelligence), they mean computer programs that learn to do cool things like talk, draw, or drive cars.

Teaching these programs is like having to solve millions of homework problems, and Nvidia’s chips make that super fast.

A normal computer (CPU) is like one smart kid working on one problem at a time.

In contrast, Nvidia’s special chips (GPUs) work like an entire class of thousands of students all solving problems together at once.

Plus, Nvidia created a special "language" called CUDA that makes it really easy for programmers to give instructions to these chips.

This powerful combination helps everything from video games to medical tools work faster and smarter.

Preface: What Nvidia Does

Nvidia is a technology company that designs chips and software for several major markets: gaming, data centers, professional visualization, and automotive.

It started out making GPUs to render video game graphics (making games look realistic), and it still leads in that area for PC gamers and console graphics.

However, its role has expanded hugely into AI and data centers.

Today, Nvidia’s GPUs are the workhorses of artificial intelligence research and cloud computing.

Companies buy Nvidia’s data-center GPUs (like the A100, H100, etc.) by the thousands to train AI models and power services like cloud AI, recommendation systems, or self-driving car brains.

This data center segment has become Nvidia’s largest revenue stream by far – for example, in early 2024 about 87% of Nvidia’s revenue came from data center products (mostly AI chips) (Source) dwarfing its gaming GPU revenue.

In one record quarter, Nvidia’s data center business pulled in $33 billion while its gaming division did about less than $4 billion (Source).

Nvidia also sells chips and platforms for professional visualization (like high-end graphics workstations for movie studios, designers, and engineers) and for automotive (it supplies systems-on-chip for autonomous driving and smart car features).

These are smaller portions of its business, but they leverage the same GPU technology and AI expertise.

Importantly, Nvidia’s dominance isn’t just about hardware; it also provides the software drivers, libraries, and tools (like CUDA, cuDNN for deep learning, etc.) that make its GPUs easier to use.

This robust software ecosystem forms a protective moat, locking in developers and setting a high barrier for competitors who would need to replicate both the hardware and the integrated software stack.

This full-stack approach – serving everything from hardware to software – has given Nvidia a strong lock-in and a leadership position.

Whether it’s enabling blockbuster video game visuals or the latest AI chatbot in the cloud, Nvidia is often behind the scenes, turning electricity into intelligence and images.

Displacing Nvidia would mean developing a GPU that not only significantly outperforms its current offerings but also supports the vast, entrenched software ecosystem—especially CUDA—that developers rely on, making backward compatibility an absolute must.

In short, a new entrant would have to deliver a breakthrough in both hardware and software to overcome Nvidia’s decade-long head start and its nearly unassailable position in AI infrastructure (Source; Source).

Technical Deep Dive: How Nvidia’s Technology Works

Historical Evolution of Nvidia’s GPU and AI Technologies

Easy Button: Nvidia’s GPUs have come a long way from simple graphics cards to the AI engines they are today.

Early on, GPUs were focused on drawing images fast for games.

Around 2006-2007, Nvidia introduced CUDA – a way to use GPUs for general computing – and researchers realized these chips could crunch more than just graphics.

Let’s walk through some key generations and why this matters for the stock price:

• Fermi (2010): Nvidia’s Fermi architecture was a big step in making GPUs friendly for supercomputing.

It added things like ECC memory (error-correcting code memory, which improves reliability) and better scheduling so GPUs could handle big scientific calculations without errors (Source).

Fermi basically said, “GPUs aren’t just for games; we can do serious computing too,” which attracted high-performance computing and early AI work.

• Kepler (2012): Built on Fermi’s success, Kepler introduced clever features to run many tasks at once more efficiently.

One was Dynamic Parallelism, meaning GPU threads (think of them as tiny workers) could create more workers on the fly without asking the CPU for help (Source).

Another was Hyper-Q, which let multiple CPU cores feed work to a single GPU simultaneously (Source).

These changes improved GPU utilization and made GPUs even more appealing for tasks like deep learning (notably, researchers trained the breakthrough AlexNet neural network on Kepler GPUs in 2012, which kicked off the deep learning boom (Source).

• Pascal (2016): This generation was laser-focused on AI and computing.

Pascal GPUs (like the Tesla P100) introduced NVLink, a super-fast connection between GPUs (faster than PCIe) that let multiple GPUs in a server share data much quicker (Source).

It also used HBM2 (High Bandwidth Memory) stacked right next to the GPU, massively increasing memory bandwidth (how quickly data can be fed to the GPU) (Source) Pascal GPUs even added support for half-precision (FP16) math, which doubled the speed for many AI calculations without much loss in accuracy (Source).

All this meant you could train bigger neural networks faster and start scaling AI training across many GPUs.

• Volta (2017): Volta (Tesla V100 GPU) was a game-changer for AI. It introduced Tensor Cores – special units designed specifically to accelerate the kind of math used in neural networks (matrix multiplications) at mixed precision (Source).

With Tensor Cores, Volta could perform deep learning calculations several times faster than Pascal per GPU.

Volta also improved NVLink (even more bandwidth) (Source) and came with 32 GB of memory on high-end models (huge at the time) (Source).

This was the first GPU truly billed as an “AI processor,” and it indeed ushered in the era of GPUs as the default choice for AI training.

• Ampere (2020): Ampere (e.g., A100 GPU) built upon Volta’s ideas – more Tensor Cores, added support for even lower precisions (like INT8/INT4 for inference), and had up to 80 GB of ultra-fast HBM2e memory.

It brought features like Multi-Instance GPU (MIG), allowing one physical GPU to be split into several smaller virtual GPUs to serve many users or tasks at once, which is great for cloud providers.

• Hopper (2022) and beyond: Hopper (H100 GPU, covered more below) continued the trend with even more AI-focused enhancements, such as the Transformer Engine to accelerate transformer model training (common in language AIs) and support for FP8 precision to speed up training large models.

• Blackwell (2025) and beyond: Building upon the advancements of the Hopper architecture, Nvidia's Blackwell architecture has now arrived, delivering substantial enhancements in AI performance.

Blackwell introduces a second-generation Transformer Engine with new micro-tensor scaling techniques for FP4 precision, doubling the performance of next-generation AI models while maintaining high accuracy.

These innovations position Blackwell as a significant leap forward in handling complex AI algorithms.

In summary, each GPU generation introduced innovations that made parallel computing easier or faster – more memory, more bandwidth, new math units, better multi-GPU connectivity – which together enabled the explosion in AI capabilities we see today.

Nvidia methodically evolved its GPUs from graphics engines into the backbone of modern AI, generation by generation.

GPUs: The Core of Nvidia’s AI Dominance

Easy Button: GPUs have thousands of small cores that work simultaneously, unlike CPUs with only a few powerful cores.

This massive parallelism makes GPUs ideal for handling the repetitive, large-scale computations required to train AI models.

Another reason Nvidia’s GPUs dominate AI is continued innovation in architecture.

Nvidia added Tensor Cores starting with Volta (2017) – these are like mini-matrix-multiplication engines inside the GPU that can multiply matrices (the core of neural network math) much faster than using regular CUDA cores.

For example, Tensor Cores can do lower-precision math (like FP16 or INT8) extremely quickly, which is perfect for AI where some precision can be traded for speed.

By the time of the Ampere and Hopper generations, these Tensor Cores were so advanced that the GPU could perform dozens of TeraFLOPs (trillions of operations per second) focused purely on AI calculations.

Nvidia also developed high-speed interconnects like NVLink that let multiple GPUs talk to each other at high bandwidth (Source) – critical when big AI models are spread across many GPUs.

Essentially, Nvidia architected its GPUs not just to draw images, but to become computational powerhouses for any parallel task.

The result is that for training AI models or doing big scientific simulations, a system with 4 or 8 Nvidia GPUs can replace dozens or even hundreds of traditional CPU-only servers in terms of speed.

In short: GPUs are great for AI because they do many simple things at once really fast. Nvidia leveraged this by creating GPUs and software that make it easy to use all that parallel power.

That’s why if you peek into an AI supercomputer, you’ll see racks full of Nvidia GPU boards doing the heavy lifting.

Hopper and Blackwell Architectures

Easy Button: Hopper (H100) is Nvidia’s state-of-the-art GPU architecture (as of its release in 2022), and Blackwell is the next generation with hyperscalers starting ordering and deploying Blackwell-based solutions from early 2025.

These architectures are named after pioneering computer scientists (Grace Hopper and David Blackwell), and they carry Nvidia’s GPU design to new heights for AI tasks.

The Hopper H100 GPU introduced several advances over its predecessor (Ampere A100).

First, it has a beefed-up Tensor Core design with what Nvidia calls the Transformer Engine – essentially hardware support for new low-precision formats (like FP8) that are especially useful for training transformer models (which power language AIs like GPT) (Source) (Source).

By dynamically using 8-bit precision in some parts of the computation, H100 can train models faster while still keeping accuracy high.

The H100 also sports 80 GB of HBM3 memory (fast high-bandwidth memory) on each card, and extremely high memory bandwidth (~3 TB/s) to keep those thousands of cores fed with data. (Source)

This means larger AI models can fit in memory and be processed quickly.

Additionally, Hopper introduced NVLink 4 and NVLink Switch System – further improving multi-GPU connectivity so that servers with multiple H100s can function even more like a single giant GPU, sharing data at 900 GB/s speeds in NVLink clusters (Source).

In terms of raw performance, an H100 can deliver up to ~1 petaflop (1e15 operations per second) of mixed-precision compute.

It’s an absolute beast designed to churn through AI training workloads that were nearly impractical before.

Blackwell, the next-gen (out now), is poised to push this even further. Hyperscalers have started ordering and deploying Blackwell-based solutions from early 2025.

Blackwell GPUs are the fifth generation with Tensor Cores, adding support for new data formats (even lower precision or novel compressed formats) to squeeze more speed out.

Each generation also tends to shrink transistor size.

Hopper architecture utilizes TSMC's 4N process, a customized 5nm technology. The subsequent Blackwell architecture employs TSMC's 4NP process, an enhanced version of 4N.

While initial industry speculation suggested that Blackwell might adopt a 3nm process to enable more cores or higher clock speeds within similar power usage, it ultimately continued with an improved 4nm process.

Another component in these architectures is the focus on multi-GPU setups: Nvidia realizes that ultra-large AI models might need dozens or hundreds of GPUs working in unison.

So, with Hopper and Blackwell, features like NVLink, NVSwitch (an on-board network connecting GPUs), and even the idea of pairing GPUs with CPU memory (as in the Grace Hopper superchip) all aim to reduce bottlenecks when scaling out.

The Hopper architecture, for instance, introduced NVLink Switch to let up to 256 H100 GPUs communicate in a single cluster at full bandwidth – crucial for supercomputers training frontier AI models.

In simpler terms, Hopper is currently the gold standard for AI chips – everything from its math cores to its memory is tuned for AI throughput. As Q1 2025, this quarter, that baton has been passed to Blackwell.

Nvidia's Blackwell architecture significantly outperforms its predecessor, Hopper, in several key areas:

Performance: Blackwell delivers up to 2.5 times the AI training performance and up to 30 times the inference performance compared to Hopper.

Efficiency: The architecture introduces a dedicated decompression engine, accelerating data processing by up to 800 GB/s, making it six times faster than Hopper when handling large datasets.

Advanced Features: Blackwell incorporates a second-generation Transformer Engine with new micro-tensor scaling techniques for FP4 precision, doubling the performance of next-generation AI models while maintaining high accuracy.

This cadence of new architectures ensures Nvidia keeps its performance lead.

With the release of Nvidia's Blackwell architecture, AI researchers and companies are actively upgrading their infrastructure to leverage its significant performance enhancements.

Major tech companies like Amazon, Microsoft, Google, Meta, and OpenAI are integrating Blackwell GPUs into their AI infrastructure to power various applications, from AI-driven search and social media algorithms to advanced cloud services. home.saxo

Nvidia’s challenge (which they’ve so far met) is to keep delivering generational leaps that justify those upgrades.

Software Moat

Easy Button: Nvidia’s software moat, anchored by its CUDA platform, is like a super secret recipe that makes its chips work better than ever.

Over years of dedicated development, entire ecosystems, libraries, and millions of lines of code have been built around CUDA.

Companies have invested heavily—both in time (often a decade) and resources—to optimize their applications for Nvidia’s platform, making it prohibitively expensive to switch away.

This deep, entrenched software foundation reinforces Nvidia’s hardware moat by ensuring that each new generation of chips is automatically supported by an established and growing body of software, thereby keeping customers firmly on Nvidia’s side (Source) (Source).

Nvidia’s CUDA, introduced in 2006, is a parallel computing platform and API that unlocks the full power of Nvidia GPUs for tasks far beyond graphics rendering.

Over time, it has evolved into a robust ecosystem of libraries, tools, and frameworks designed to extract maximum performance from Nvidia hardware.

Millions of developers have built machine learning, scientific computing, and AI applications on CUDA—investing in millions of lines of optimized code that make switching to another platform prohibitively expensive.

This deep integration between hardware and software not only boosts performance through features like Tensor Cores and optimized memory handling but also reinforces Nvidia’s market leadership.

This has created a virtuous cycle where more developers and enterprises use Nvidia, which encourages more software and tooling to cater to Nvidia, further reinforcing its lead.

Competitors such as AMD and Intel have created their own software platforms (ROCm and oneAPI), yet none have reached the same level of industry adoption or developer support.

In essence, Nvidia’s software moat makes its hardware far more effective and entrenched in the industry, ensuring that its cutting-edge GPUs remain the preferred choice for AI and high-performance computing workloads (Source) (Source).

Now you may ask…

I'm a Developer. I don't use CUDA, do I?

No—you likely don’t write CUDA code yourself.

In fact, most developers never even think about CUDA. When you're coding in Python, C#, or similar languages, you're usually leveraging libraries and frameworks (like PyTorch or TensorFlow) that handle all the heavy lifting of GPU acceleration behind the scenes.

Think about it this way: I've written code without ever realizing I was riding on the coattails of CUDA.

Even though I don’t write CUDA code myself, every time I run my Python or C# code, I’m benefiting from decades of optimization embedded in these libraries. They’re built on Nvidia’s CUDA platform—a system so refined that billions of lines of code assume they’ll run on Nvidia GPUs.

Consider this: In recent Kaggle Machine Learning & Data Science Surveys, around 80–85% of respondents reported using Python as their primary language for AI and machine learning.

That’s because the entire AI software ecosystem is tuned to Nvidia’s hardware. If you were to switch away from Nvidia GPUs, that very same Python code would run noticeably slower.

Not too long ago, a difference of 10 versus 20 milliseconds might have seemed trivial, but today, even a 5-millisecond delay in AI training or inference can mean the difference between a product that works and one that doesn’t—imagine a model that takes a month to train versus one that takes a year.

This isn’t just a performance edge; it’s an unassailable moat. Developers would immediately notice if libraries or hardware moved away from Nvidia.

That underlying work of CUDA is the secret sauce powering our code, and a shift away from Nvidia would effectively grind a decade of progress to a halt.

In short, while you might just be writing high-level code without ever thinking about CUDA, you’re indirectly reaping the benefits of Nvidia’s relentless performance optimizations and a robust software ecosystem.

And that’s why Nvidia’s moat isn’t just about having a better chip—it’s built on a foundation of billions of lines of production code that rely on CUDA every single day.

Inference vs. Training: How Nvidia Powers AI Workloads

Easy Button: Training is like studying hard for a test, while inference is taking the test. Nvidia’s GPUs process massive data in parallel during training and then efficiently run the model during inference.

Training an AI model and inferencing (running a trained model) are two different jobs – Nvidia GPUs handle both, but the way they’re optimized can differ.

Think of training like studying for a test, and inference like taking the test.

Training is the process where an AI model (say a neural network) learns from a huge dataset, adjusting millions or billions of parameters.

It’s extremely computationally intensive – you have to show the model many examples and calculate how far its guess was from the truth (the error), then tweak weights and do it again, over and over.

This is where GPUs shine: training might involve trillions of operations (like matrix multiplications and additions), which Nvidia’s GPUs perform in parallel very efficiently.

A single training run for a large model might use many GPUs for days or weeks. Nvidia’s flagship data center GPUs (A100, H100, etc.) are built to handle these heavy workloads, with features like robust Tensor Cores for fast math, lots of memory for big batches of data, and high bandwidth to communicate between GPUs (since large-scale training often uses multiple GPUs).

Inference is what happens after training, when the model is deployed to actually do tasks – like an AI model answering your question or identifying objects in a photo.

Inference needs to be fast and efficient, because it might be happening millions of times (think of millions of queries to ChatGPT, or billions of photos scanned on social media).

Each single inference is generally less work than a full training batch, but the challenge is doing them with low latency (quick response) and often under strict power/efficiency constraints (especially if running in a cloud service or an autonomous car, etc.).

Nvidia addresses this in a few ways:

• The same GPU that trained the model can also serve it for inference; high-end GPUs can be partitioned (using MIG on A100/H100) to serve multiple inference jobs at once.

But there are also scenarios where specialized inference chips (like smaller GPUs or ASICs) are used for cost efficiency.

• TensorRT: Nvidia provides software called TensorRT which is an inference optimizer.

It takes a trained neural network and tunes it to run as fast as possible on Nvidia hardware – for example, it might compress some weights, fuse certain operations, or use lower precision calculations where they don’t hurt accuracy.

By doing this, TensorRT can often double or more the inference throughput compared to running a raw model, by leveraging GPU features fully.

• INT8/FP8 precision: During inference, you can often use lower numerical precision than in training.

Nvidia GPUs support 8-bit integers (INT8) or even 4-bit in newest cards, which allow them to do more operations per second.

The trade-off is tiny losses in model accuracy, but many models can handle quantization (conversion to lower precision) without significant accuracy drop.

This hugely improves inference speed and efficiency.

For instance, an H100 GPU using FP8 can achieve up to 2 petaFLOPs of compute (Source) which is used for high-volume inference of large language models.

• Architecture tweaks: Nvidia’s newer architectures sometimes include specialized inference modes.

Hopper introduced a feature to speed up GANs and transformer model inference by dynamic programming in hardware (useful for things like beam search in language generation).

The GPUs also have scheduling and multi-stream capabilities to handle many small inference jobs concurrently.

In practical terms, if a company deploys an AI service – say a voice assistant – they might use Nvidia A100/H100 GPUs in their server back-end to handle the speech recognition and response generation for many users at once.

Those GPUs are running inference on the trained models. Nvidia’s GPUs are very good at this, but they do face competition here from more specialized chips (since inference at scale is about cost-per-query).

Nvidia’s response has been to make their GPUs and software extremely flexible: the same GPU that can train a giant model can be re-partitioned to handle thousands of inference requests, which is convenient.

Additionally, Nvidia offers different GPU lines, like the Nvidia L4 (a smaller GPU aimed at efficient inference for video and AI in the cloud) and even has software stacks like NVIDIA Triton Server to help manage inference serving.

In summary, training = heavy learning phase (GPU-intensive, long runs, lots of math) and inference = execution phase (many quick operations, need efficiency).

Nvidia’s GPUs are used in both phases: massive GPU clusters for training, and then often those or smaller GPUs for serving.

Thanks to optimizations like TensorRT and precision scaling, Nvidia has ensured its hardware is top-notch at squeezing maximum inference performance, which is why you’ll find Nvidia GPUs not only in AI research labs but also behind AI-powered services delivering results to your phone or laptop in real-time.

Beyond GPUs: Nvidia’s Expansion into DPUs and AI Chips

Easy Button: Nvidia isn’t just about GPUs anymore. As AI and cloud computing needs grow, Nvidia has expanded into other types of processors to handle tasks that GPUs or CPUs aren’t best at. Two big areas are DPUs (Data Processing Units) and Nvidia’s own custom CPU (Grace) for AI/datacenter.

• BlueField DPUs: Imagine in a data center, aside from computing (CPU/GPU) there’s a lot of data moving around: networking (sending data between servers), storage (reading/writing data to disks), security checks (firewalls, encryption).

Traditionally, CPUs handle a lot of that overhead, which can bog them down when they’re also trying to run applications.

Nvidia’s answer is the BlueField DPU, which is essentially a smart network interface card with its own multi-core processor (Arm-based) and specialized hardware to offload those data-moving tasks.

A DPU is like a traffic cop + handyman for the server: it manages network traffic, encryption, storage access, etc., so the CPU is freed up to run the main application. BlueField DPUs can do things like encrypt data on the fly, enforce security rules, or even run virtualization tasks – all on the NIC (Network Interface Card) itself, at wire speed (Source) (Source).

This is super useful in cloud data centers where efficiency and security isolation are paramount.

For example, with DPUs, cloud providers can isolate each tenant’s network and storage workloads onto the DPU, meaning the main CPU is fully devoted to the user’s application and can deliver more consistent performance.

By offloading and accelerating data-centric tasks, DPUs improve overall data center throughput and reduce CPU loads. Nvidia’s BlueField-2 and BlueField-3 DPUs are already being adopted in high-end servers; they pair well with GPU servers especially, because when you have a cluster of GPUs exchanging tons of data, a DPU can manage that communication more efficiently than a CPU could.

In an “AI factory” (Nvidia’s term for AI data centers), DPUs form the third pillar of computing (Source) (Source) CPU for general logic, GPU for heavy parallel compute, and DPU for data movement and orchestration.

• Grace CPU: Nvidia even designed its own CPU, named Grace, to pair with GPUs in data centers.

Why would Nvidia make a CPU when companies like Intel and AMD dominate CPUs? The reason is specific needs of AI and high-performance computing.

Grace is an Arm-based server CPU optimized for extremely high memory bandwidth and energy efficiency for feeding data to GPUs.

One of the bottlenecks in GPU computing can be the CPU not delivering data fast enough, or not handling certain tasks well (like massive data preprocessing).

Grace attempts to solve that by being a beast in memory access: a Grace CPU can support an enormous amount of memory (up to 1 terabyte/second bandwidth and up to 960 GB capacity in its superchip form. (Source) (Source).

Nvidia connects Grace CPUs to their GPUs via a special high-speed link (NVLink-C2C) so that the CPU and GPU act almost like a single unit, sharing memory coherently at 900 GB/s (Source).

This is far faster than traditional PCIe connections between CPU and GPU. The Grace CPU is based on Arm’s Neoverse cores (144 cores in a dual-chip module) and is designed for scenarios like giant AI models or scientific simulations where you need both strong CPU and GPU performance.

By providing its own CPU, Nvidia offers a more complete platform: for instance, the Grace Hopper Superchip packages a Grace CPU and a Hopper GPU together on one board, optimized to work in tandem.

In AI training servers, this can mean better performance-per-watt and the ability to handle very large datasets in memory.

Nvidia’s move into DPUs and CPUs represents a strategy to own more of the data center’s “brain”.

Instead of just selling GPUs and relying on others for the rest, Nvidia can now sell a combination of CPU+GPU+DPU – covering general compute, accelerated compute, and data movement.

This synergy can yield big gains: for example, using a Grace CPU with an H100 GPU might train certain large language models 10× faster than using a traditional x86 CPU with the GPU, according to Nvidia’s projections (Source).

And DPUs can reduce CPU needs, potentially saving money for cloud operators or allowing more of the CPU budget to go into running AI models.

In simpler terms: Nvidia looked at the data center and said, “We can improve the other parts too, not just the number-crunching part.”

BlueField DPUs make sure data gets where it needs to go quickly and securely, and Grace CPUs ensure the brains feeding the GPUs are just as fast and scalable as the GPUs themselves.

Together, this trio (CPU+GPU+DPU all designed by Nvidia) can make an AI supercomputer more efficient and easier to build – all using Nvidia’s ecosystem.

It’s a bold expansion from being “the GPU company” to being a broader data center platform company.

Competitive Technical Analysis

How Nvidia Stacks Up Against AVGO, AMD, and Amazon’s In-House Chips

Easy Button: Nvidia’s success has attracted a lot of competitors, each coming from different angles – traditional chip rivals like AMD, other tech giants like Amazon and Google making their own chips, and specialty players like Broadcom designing custom silicon.

Here’s a look at how Nvidia compares:

• Vs. Broadcom (AVGO) custom chips: Broadcom isn’t typically known for AI chips the way Nvidia is, but they have a significant business designing custom ASICs for very large customers (the “hyperscalers” – think Google, Meta, Amazon, etc.).

Broadcom basically says to these cloud giants: “Tell us what you need, and we’ll design a chip for that purpose.”

Recently, Broadcom revealed it secured deals with five major hyperscalers to develop custom AI processors (Source) (Source).

What kind of chips are these?

They could be specialized accelerators for specific AI workloads, possibly similar to Google’s TPU or an inference processor that’s highly optimized for those companies’ software.

Broadcom’s custom AI chips might not be sold broadly on the market (they are likely exclusive to the client who commissioned them), so they don’t directly threaten Nvidia’s broad customer base, but they do mean that some of the largest buyers of Nvidia GPUs are investing in alternatives.

The scale is huge – there are talks of hyperscalers wanting millions of these accelerators in the coming years (Source).

However, designing a successful AI ASIC is very hard (ask Intel, which bought two AI chip startups – Nervana and Habana – with mixed results).

Nvidia still wins on flexibility and ecosystem: an ASIC might beat a GPU on a narrow task, but a GPU can do many tasks (training, various model types, etc.) and has the full CUDA software stack.

Broadcom’s approach is more of a partnership model: they become the silent architect of bespoke chips for the big players.

In summary, Broadcom can nibble at the edges of Nvidia’s market by siphoning off a Google or Meta to use custom silicon for some workloads, but Broadcom isn’t competing for the wide developer community – it’s a behind-the-scenes threat that could reduce Nvidia’s largest customers’ dependency on GPUs.

• Vs. AMD (MI300 and other GPUs): AMD is Nvidia’s most direct competitor in GPUs.

They also make data center GPUs (the Radeon Instinct/MI series) and have an open software stack (ROCm) to compete with CUDA.

AMD’s latest flagship is the MI300 series, which comes in variants like MI300A (which combines CPU and GPU in one package) and MI300X (GPU with massive memory).

Technically, AMD’s MI300X is impressive: it has 192 GB of HBM3 memory (Nvidia’s H100 has 80 GB, or 96 GB in the special H100 NVL version) – that huge memory helps to hold very large AI models entirely on one card (Source).

MI300X also touts higher memory bandwidth (~5.3 TB/s) than Nvidia’s H100 (~3.35 TB/s), which can give it an edge in feeding data quickly (Source) (Source).

Early benchmarking by third parties suggests MI300X can outperform H100 in certain large-language-model inference tasks, especially at batch sizes where its extra memory keeps the GPU fully utilized (Source) (Source).

AMD also has the MI250 (previous gen) deployed in some top supercomputers (like Frontier, the first exascale system).

With MI300, AMD is blending CPUs and GPUs: MI300A pairs 24 Zen4 CPU cores with a CDNA3 GPU and lots of HBM in one module – ideal for HPC workloads that need tight CPU-GPU coupling.

In pure compute, MI300 is certainly a capable rival to Nvidia’s offerings.

Vs. Amazon’s in-house Trainium/Inferentia: Amazon Web Services (AWS) has developed its own family of AI chips: Trainium for training and Inferentia for inference, designed by its Annapurna Labs unit.

AWS’s motivation is clear – they spend billions on Nvidia GPUs for their cloud, and if they can use in-house chips, they could lower costs for themselves and customers (and control their supply chain better).

How do these chips compare? The latest Trainium2 (announced in 2023) is a dense 5nm chip built specifically for AI training.

An AWS Trainium2 chip has up to 1.3 petaFLOPS of FP8 performance and comes with 96 GB HBM memory, whereas Nvidia’s H100 has about 2.0 petaFLOPS of FP8 and 80 GB memory (or 94 GB in special configs) (Source).

So on raw spec, a single Trainium2 is in the ballpark of an H100, slightly trailing in compute and bandwidth.

AWS bundles Trainium chips into systems called Trn1 UltraClusters – for example, 64 Trainium2 chips across multiple boards, all interconnected.

They claim that in AWS data centers, a Trainium2 instance can offer 30–40% better price-performance than an equivalent Nvidia GPU-based instance (Source).

That’s a key metric: Amazon might accept slightly lower raw performance if they can make it much cheaper for the same work. And since they control the full stack, they can optimize their software (AWS Neuron SDK) heavily for these chips.

Vs. other in-house efforts (Google TPU, etc.): Google has its TPUs (Tensor Processing Units), now on generation 4 (with TPUv5 likely in development).

Google’s TPUv4 is roughly comparable to Nvidia’s A100 in many tasks and has been used to train large models like PaLM.

Google uses TPUs heavily for internal products and also offers them on Google Cloud. The TPU is an ASIC specifically optimized for matrix operations with Google’s TensorFlow framework, and they’ve shown excellent performance per dollar for large-scale training.

However, outside Google’s ecosystem, TPUs are not widely used (TensorFlow users in Google Cloud might use them, but the world of PyTorch largely sticks to GPUs).

It’s a similar story with Meta – Meta is developing its own AI chips (the MTIA for inference, and a planned training chip) (Source) (Source) to reduce future reliance on Nvidia.

These in-house chips are threats in that each big tech firm wants to shave off some portion of Nvidia usage for cost or strategic reasons.

If Google uses TPUs for 80% of its AI and only 20% Nvidia, that’s a lot of business Nvidia lost. Ditto for Amazon and Meta if their efforts succeed.

So far, Nvidia has managed to remain indispensable: even those companies still buy plenty of Nvidia hardware (for versatility, for GPU-specific tasks, or simply because their demand is so high they need all the chips they can get).

Nvidia also counters with software dominance – many of these custom chips need to prove they can support the vast array of AI models and workloads out there.

Nvidia’s GPUs can run almost anything (vision, language, recommendation, reinforcement learning, etc.), often with off-the-shelf libraries.

A custom chip might excel at one or two things (like Google TPUs are great for transformer models in TensorFlow, but less flexible for other stuff).

In summary, Nvidia’s competitive moat stands on a three-legged stool: cutting-edge hardware performance, a rich and sticky software ecosystem, and an economy of scale (serving many industries, which funds their huge R&D).

Competitors are attacking each leg – AMD with hardware, cloud providers with scale of their own, and some open-source efforts with software.

Yet, Nvidia’s lead, especially in the developer mindshare and software, gives it a resilient position.

Even if a rival chip is theoretically faster or cheaper, customers consider the total cost of adoption – rewriting software, retraining teams – which often keeps them on team Nvidia.

Nvidia isn’t complacent either: by pushing into CPUs and DPUs, and continuously updating CUDA (including support for open standards like Python and potentially CUDA on RISC-V in the future), they are trying to stay ahead of the pack.

One can draw an analogy: Nvidia in AI chips is like the x86 Intel in PCs of old – the standard platform.

AMD might be like… AMD in PCs (an alternative x86), while others are like specialized appliances.

Time will tell if these new challengers significantly erode Nvidia’s dominance or just coexist at the edges.

For now, Nvidia remains the benchmark to beat in AI acceleration.

DeepSeek and Future AI Infrastructure Trends

Potential Impacts of DeepSeek on Nvidia

Easy Button: “DeepSeek” refers to a surprising new development in AI – for instance, a hypothetical (or in recent news, a real) AI model from China called DeepSeek that reportedly matched the performance of top Western AI models like GPT-4 but with far less computing power needed.

Think of it as a smarter training method or more efficient AI design that doesn’t require as many brute-force calculations.

If AI breakthroughs like DeepSeek continue, they could change the game for companies like Nvidia. Let’s explore positive vs negative scenarios:

• Positive scenario (for Nvidia): AI demand keeps growing explosively.

Models like ChatGPT, image generators, etc., get integrated into every app and service, and new models (for science, medicine, finance) keep coming.

Even if some models become more efficient, the overall usage skyrockets – e.g., millions of people using AI features billions of times a day.

This means data centers need more total compute to serve all those queries. Also, as models get efficient, researchers tend to just build bigger models to achieve even better results (using the freed-up compute to increase model size until they again max out hardware).

In this scenario, Nvidia benefits because it sells the shovels for this gold rush.

Every big cloud and internet company will keep buying Nvidia GPUs by the truckload to both train next-gen models and deploy them at scale.

We’ve already seen some of this: after ChatGPT’s success, companies massively increased their AI capital expenditures, which led to Nvidia’s data center revenues booming over 3× in a year (Source).

If AI truly becomes as ubiquitous as electricity in services, Nvidia stands to be one of the primary suppliers of the “engines” that run AI.

Another angle: new AI applications like autonomous vehicles, robotics, IoT, etc., could create new markets for Nvidia’s hardware at the edge (not just in data centers).

For example, each autonomous car might need a powerful Nvidia computer onboard (Nvidia already has the DRIVE platform for cars).

Robotics in warehouses and factories might use Nvidia Jetson modules for AI.

So an AI-positive future with heavy adoption everywhere could see Nvidia selling not just to clouds but to every industry (retail, health, etc.) embedding AI.

In short, rising AI tide lifts Nvidia’s boat – even if some efficiency gains happen, the sheer volume of AI usage requires more infrastructure, and Nvidia’s scalable solutions (from small modules to giant H100 clusters) will be in high demand.

• Negative scenario (for Nvidia): What if a paradigm shift in AI reduces the need for so many GPUs?

If AI researchers find algorithmic innovations that make training dramatically more efficient (like better learning algorithms, more efficient architectures, or techniques like federated learning, etc.), then you wouldn’t need to throw as much brute-force compute at the problem.

Companies could achieve the same AI capabilities with fewer GPUs. Another possibility is specialized hardware gets good enough that GPUs are less needed.

For instance, what if someone invents an optical AI computer or a quantum accelerator that can do certain AI computations with far less energy?

Or simply, what if competitors’ ASICs (like Google’s TPU or some new startup’s chip) become so efficient that Nvidia’s advantage is eroded?

In any of these cases, Nvidia could face a scenario where the total demand for GPUs slows down even as AI progresses, because people find ways to “do more with less.”

The DeepSeek model led some analysts to speculate that compute might become less of a barrier (Source) – if true, big tech firms might reconsider massive spending on high-end chips if they can get by with smaller models or cheaper hardware.

In the extreme, imagine AI research hits a point of diminishing returns with scale (some have argued we might hit an “AI efficiency” wall where bigger models stop giving gains proportional to cost). If the frenzy for ever-larger GPU clusters subsides, Nvidia’s growth would also temper.

Another negative angle: open-source AI models and efficient algorithms could let smaller companies run powerful AI on modest hardware.

If a company doesn’t need to rent a whole GPU farm from AWS because a lightweight model on a few servers can do the job, that again cuts into Nvidia’s potential market.

We’re seeing hints: some open models can run on a single high-end PC GPU (like LLaMA 2 7B, etc. on a 16GB card) for tasks that people assumed required much larger setups.

However, it’s worth noting Nvidia can often adapt: if efficiency gains come from algorithmic improvements, researchers may still use the saved compute to try something else that uses GPUs.

And if new hardware paradigms emerge, Nvidia has the resources to invest in them (they could build optical chips, they’ve looked at things like DPUs as mentioned, etc.).

But clearly, Nvidia’s biggest risk is the field of AI finding a way not to need Nvidia as much. DeepSeek’s case showed that a clever approach might cut training cost by an order of magnitude – if that becomes standard, the “arms race” of buying GPUs could cool off.

CapEx Spending of META, MSFT, and GOOGL

Easy Button: The big tech companies (Meta/Facebook, Microsoft, Google, Amazon) are pouring massive money into building AI infrastructure – this is a double-edged sword for Nvidia.

On one hand, a lot of that money goes into buying Nvidia chips (good for Nvidia); on the other, some of it goes into developing alternatives or in-house solutions (potentially reducing future dependence on Nvidia).

First, let’s get a sense of scale: Meta (Facebook’s parent) announced it would spend an astonishing $60–65 billion in 2024 on capital expenditures, largely to bolster its AI capacity (Source).

This is more than double what it spent in 2023.

Meta is building huge data centers (they mentioned a new 2 GW data center dedicated largely to AI) and aims to have over 1.3 million Nvidia GPUs in place by end of 2025 to power its AI (things like their recommendation systems, content ranking, and future generative AI features) (Source) Meta is currently one of Nvidia’s top customers for AI chips (Source).

Microsoft likewise is on an AI spending spree, mainly because of its partnership with OpenAI and integration of AI into Azure and products like Bing, Office, etc. Microsoft’s capex is projected around $80 billion for its fiscal 2025 (Source) – a huge chunk of which is for data centers full of AI hardware.

They have been buying Nvidia GPUs (reportedly even leasing entire cargo jets to get H100s delivered), and also collaborating with AMD on AI chips (the rumored Athena project) to diversify.

Microsoft spending ~30% of its revenue on capex is unprecedented (for comparison, historically these companies spent maybe 10-15% of revenue on capex) (Source) – it underscores how critical AI is strategically.

In its latest earnings call in 2-4-2025, Google (Alphabet) said it plans about $75 billion capex in 2025, up from $52B in 2024 (Source) and consensus estimates for $59B in 2025.

Google’s spending is partly on their own TPU pods and data center infrastructure, but they also buy Nvidia GPUs for certain workloads (and possibly for Google Cloud customers who demand GPUs).

Google’s massive spend is good for Broadcom (which helps build Google’s custom TPUs and switching gear) (Source) and still beneficial to Nvidia (Google Cloud offers Nvidia instances, and Google likely uses GPUs for some research like DeepMind or for compatibility with TensorFlow/PyTorch outside of TPU-optimized stuff).

Finally, Amazon (AMZN) announced an enormous $100B in CapEx, the majority of which will go to AI infrastructure.

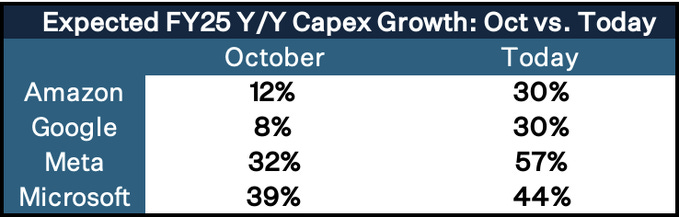

Here is a table put together by Gene Munster on the amount of CapEx spend that was expected for 2025 as of Oct 2024, and the amount actually guided to in February of this year by each of the hyperscalers:

The key point is, all these players are in an “arms race” to deploy AI compute, which in the short term means heavy purchases of whatever is the best available hardware – mostly Nvidia GPUs.

This surge in capex from Meta, Microsoft, Google (and others) has turbocharged Nvidia’s sales in the past year.

It’s like each company is scrambling to build the biggest AI factory, and Nvidia is selling them the machinery.

That’s a major positive driver for Nvidia’s business – indeed, Nvidia’s stock and revenue soared in 2023 largely because these companies unexpectedly ramped their orders (for example, one quarter Nvidia gave a forecast more than 50% above estimates, implying an enormous backlog of orders from these cloud giants).

Conclusion: Nvidia’s Future in AI and Compute

Easy Button: Nvidia stands today as a linchpin of the AI industry – its GPUs and software power the majority of AI research and deployments and displacing that software to hardware moat is going to be nearly impossible.

The company’s strengths are clear: best-in-class hardware, a rich software ecosystem (CUDA) that keeps developers locked in and productive, and an ability to keep innovating across the stack (GPUs, specialized cores, networking, now even CPUs and DPUs).

This has created a virtuous cycle where more developers and enterprises use Nvidia, which encourages more software and tooling to cater to Nvidia, further reinforcing its lead.

Financially, Nvidia has transformed in a few short years from relying on gaming graphics to being driven by data center AI chip sales – a higher-margin, fast-growing market.

It has no real equals in the AI training space at the moment – competitors exist, but none have dethroned the king yet.

However, Nvidia’s future isn’t without risks and challenges.

The competitive landscape is intensifying: AMD is finally fielding competitive GPUs (and leveraging the CPU+GPU angle where it has an advantage), and Big Tech players (Google, Amazon, Meta, Microsoft) are investing heavily in alternative silicon.

There’s also the possibility of a shift in AI computation needs – if efficiency breakthroughs (like the DeepSeek model example) reduce the demand for brute-force compute, or if software frameworks shift to be more hardware-agnostic or favor open-standard accelerators, Nvidia could feel a pinch.

Nvidia’s stock price and valuation already assume a trajectory of dominance in AI; to live up to that, Nvidia will have to continuously evolve. That likely means:

• Keeping its GPUs at the bleeding edge of performance and efficiency so that even internal projects at Google/Meta find it hard not to use them.

Blackwell and subsequent architectures will be crucial – they must deliver noticeable gains that justify the ecosystem sticking with Nvidia.

• Strengthening the CUDA moat – making development on Nvidia so convenient (through libraries, AI model repositories, partnerships, cloud services like NVIDIA AI Enterprise suite) that using an alternative is more pain than it’s worth.

This might include embracing open-source more, or ensuring key AI frameworks run best on Nvidia.

• Expanding into new markets: For example, pushing more into automotive (self-driving), medical AI, edge AI.

Nvidia is already doing this (Drive PX for cars, Clara for healthcare, Jetson for edge robotics).

If it can become as indispensable in those emerging fields as it is in data center AI, that diversifies its success.

• Addressing cost: Nvidia might need to offer solutions for different tiers – not every user can afford an H100.

By offering lower-cost inference GPUs (like the L4) or even licensing IP (like giving car makers an optimized chip design), Nvidia can capture volume markets too, not just the high end.

The cloud providers will also appreciate any improvement in price/performance, whether through better chips or better bulk pricing agreements.

• Collaboration and integration: Nvidia’s Grace CPU and BlueField DPU moves show it’s trying to integrate vertically.

If those succeed, Nvidia can sell a platform, not just a chip. For example, an “AI server in a box” solution that includes Grace (CPU) + Hopper/Blackwell (GPU) + BlueField (DPU) + NVSwitch, etc., all tuned to work together.

This could make it even easier for customers to adopt Nvidia at scale (because instead of assembling parts, they get a cohesive package).

However, in the fast-evolving tech landscape, maintaining and deepening its moat will require relentless innovation and strategic expansion.

Thanks for reading, friends.

The author has no position in NVDA at the time of this writing.

Please read the legal disclaimers below and, as always, remember: we are not making a recommendation or soliciting the sale or purchase of any security ever. We are not licensed to do so, and we wouldn’t do it even if we were. I’m sharing my opinions.

Legal

The information contained on this site is provided for general informational purposes, as a convenience to the readers. The materials are not a substitute for obtaining professional advice from a qualified person, firm or corporation. Consult the appropriate professional advisor for more complete and current information. Capital Market Laboratories (“The Company”) does not engage in rendering any legal or professional services by placing these general informational materials on this website.

The Company specifically disclaims any liability, whether based in contract, tort, strict liability or otherwise, for any direct, indirect, incidental, consequential, or special damages arising out of or in any way connected with access to or use of the site, even if we have been advised of the possibility of such damages, including liability in connection with mistakes or omissions in, or delays in transmission of, information to or from the user, interruptions in telecommunications connections to the site or viruses.

The Company makes no representations or warranties about the accuracy or completeness of the information contained on this website. Any links provided to other server sites are offered as a matter of convenience and in no way are meant to imply that The Company endorses, sponsors, promotes or is affiliated with the owners of or participants in those sites, or endorse any information contained on those sites, unless expressly stated.